Ok Google, What Am I Doing? Acoustic Activity Recognition Bounded by Conversational Assistant Interactions

ABSTRACT

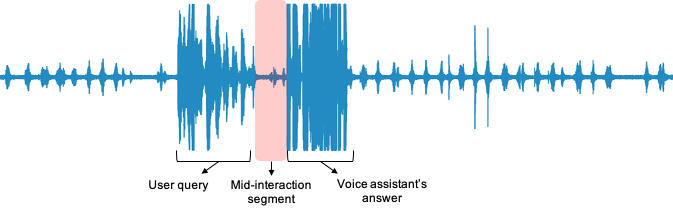

Conversational assistants in the form of stand-alone devices such as Amazon Echo and Google Home have become popular and are embraced by millions of people. By serving as a natural interface to services ranging from home automation to media players, conversational assistants help people perform many tasks with ease, such as setting timers, playing music and managing to-do lists. While these systems offer useful capabilities, they are largely passive and unaware of the human behavioral context in which they are used. In this work, we explore how off-the-shelf conversational assistants can be enhanced with acoustic-based human activity recognition by leveraging the short interval after a voice command is given to the device. Since always-on audio recording can pose privacy concerns, our method is unique in that it does not require capturing and analyzing any audio other than the speech-based interactions between people and their conversational assistants. In particular, we leverage background environmental sounds present in these short-duration voice-based interactions to recognize activities of daily living. We conducted a study with 14 participants in 3 different locations in their own homes. We showed that our method can recognize 19 different activities of daily living with an average precision of 84.85% and an average recall of 85.67% in a leave-one-participant-out performance evaluation with 30-second audio clips bounded by the voice interactions.
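To make the leave-one-participant-out protocol concrete, the sketch below holds out each participant's clips in turn, trains on everyone else, and reports precision and recall averaged over classes and then over folds. It is only an illustration under stated assumptions: the one-dimensional features, the activity labels, the nearest-centroid classifier, and every function name are hypothetical stand-ins, not the authors' features or model, and the abstract does not say how its averages were computed.

```python
from collections import defaultdict


def leave_one_participant_out(participant_ids):
    """Yield (held_out, train_idx, test_idx), holding out each participant once."""
    for held_out in sorted(set(participant_ids)):
        train = [i for i, p in enumerate(participant_ids) if p != held_out]
        test = [i for i, p in enumerate(participant_ids) if p == held_out]
        yield held_out, train, test


def macro_precision_recall(y_true, y_pred):
    """Unweighted mean of per-class precision and recall (an assumed averaging)."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls = [], []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return sum(precisions) / len(labels), sum(recalls) / len(labels)


def fit_centroids(features, labels):
    """Placeholder classifier: the mean feature value of each class."""
    sums, counts = defaultdict(float), defaultdict(int)
    for x, y in zip(features, labels):
        sums[y] += x
        counts[y] += 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Assign the class whose centroid is closest to x."""
    return min(centroids, key=lambda y: abs(centroids[y] - x))


def evaluate(features, labels, participant_ids):
    """Average macro precision and recall over all held-out participants."""
    scores = []
    for _, train, test in leave_one_participant_out(participant_ids):
        centroids = fit_centroids([features[i] for i in train],
                                  [labels[i] for i in train])
        preds = [predict(centroids, features[i]) for i in test]
        scores.append(macro_precision_recall([labels[i] for i in test], preds))
    mean_precision = sum(p for p, _ in scores) / len(scores)
    mean_recall = sum(r for _, r in scores) / len(scores)
    return mean_precision, mean_recall


# Toy data (hypothetical): one scalar feature per clip, two activities,
# three participants with one clip per activity each.
features = [0.9, 5.1, 1.1, 4.8, 1.0, 5.2]
labels = ["cooking", "vacuuming"] * 3
participants = ["P1", "P1", "P2", "P2", "P3", "P3"]

precision, recall = evaluate(features, labels, participants)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Splitting by participant rather than by clip keeps any one person's home acoustics out of both the training and test sets at once, which is why this protocol is a stricter estimate of how a model generalizes to unseen users.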

FULL CITATION

Rebecca Adaimi, Howard Yong, and Edison Thomaz. 2021. Ok Google, What Am I Doing? Acoustic Activity Recognition Bounded by Conversational Assistant Interactions. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 5, 1, Article 2 (March 2021), 24 pages. DOI:https://doi.org/10.1145/3448090