AudioIMU: Enhancing Inertial Sensing-Based Activity Recognition with Acoustic Models

ABSTRACT

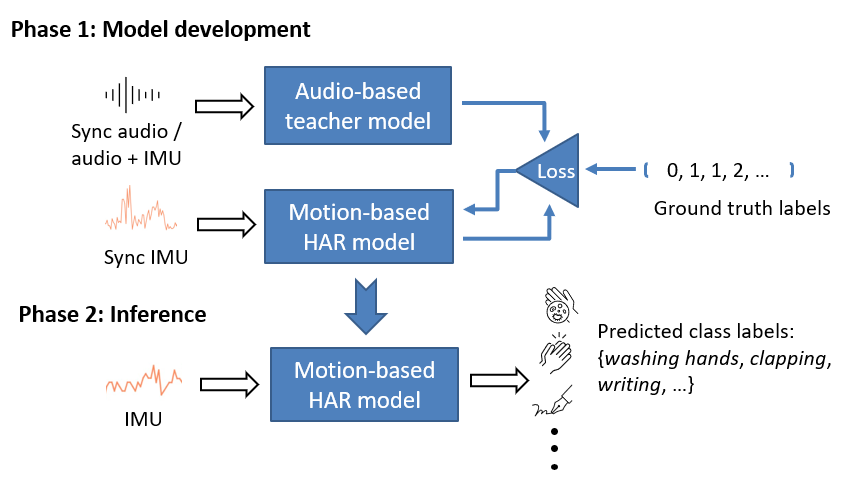

Modern commercial wearable devices are commonly equipped with inertial measurement units (IMUs) and microphones. The motion and audio signals captured by these sensors can be used to recognize a variety of physical activities. Compared to motion data, audio data contains rich contextual information about human activities, but continuous audio sensing also imposes an extra data sampling burden and raises privacy issues. Given these challenges, this paper studies a novel approach to augmenting IMU models for human activity recognition (HAR) with the superior acoustic knowledge of activities. Specifically, we propose a teacher-student framework to derive an IMU-based HAR model. Instead of training with motion data alone, an advanced audio-based teacher model is incorporated to guide the student HAR model. Once trained, the HAR model takes only motion data as input for inference. Based on a semi-controlled study with 15 participants, we show that an IMU model augmented with the proposed framework outperforms the original baseline model without augmentation (74.4% versus 70.0% accuracy) in recognizing 23 activities of daily living. We further discuss insights into the difference in model performance with and without our framework and possible trade-offs for actual deployment.
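The abstract does not spell out how the teacher guides the student, so the sketch below is only an illustration of one common way to set up teacher-student training: the generic knowledge-distillation loss, which mixes a hard-label cross-entropy term with a soft-target term that pulls the student's output distribution toward the teacher's. The function names, the mixing weight `alpha`, and the `temperature` value are all assumptions made for this example and are not taken from the paper.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      alpha=0.5, temperature=2.0):
    """Generic distillation loss (an assumed formulation, not the paper's).

    student_logits: outputs of the IMU-based student for one sample
    teacher_logits: outputs of the audio-based teacher for the same sample
    label:          index of the ground-truth activity class
    alpha:          hypothetical weight on the soft-target term
    """
    # Hard-label term: cross-entropy of the student against the ground truth.
    student_probs = softmax(student_logits)
    hard = -math.log(student_probs[label])

    # Soft term: KL(teacher || student) on temperature-softened distributions.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(t * math.log(t / s)
               for t, s in zip(p_teacher, p_student) if t > 0)

    # The T^2 factor is the usual scaling that keeps the soft term's
    # gradient magnitude comparable across temperatures.
    return (1 - alpha) * hard + alpha * temperature ** 2 * soft
```

In a setup like this, the teacher's logits are needed only while training. At inference time the student runs on motion data alone, which matches the deployment property described in the abstract.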

FULL CITATION

Dawei Liang, Guihong Li, Rebecca Adaimi, Radu Marculescu, and Edison Thomaz. 2022. AudioIMU: Enhancing Inertial Sensing-Based Activity Recognition with Acoustic Models. In Proceedings of the 2022 ACM International Symposium on Wearable Computers (ISWC '22). Association for Computing Machinery, New York, NY, USA, 44–48. https://doi.org/10.1145/3544794.3558471