Leveraging Sound and Wrist Motion to Detect Activities of Daily Living with Commodity Smartwatches

ABSTRACT

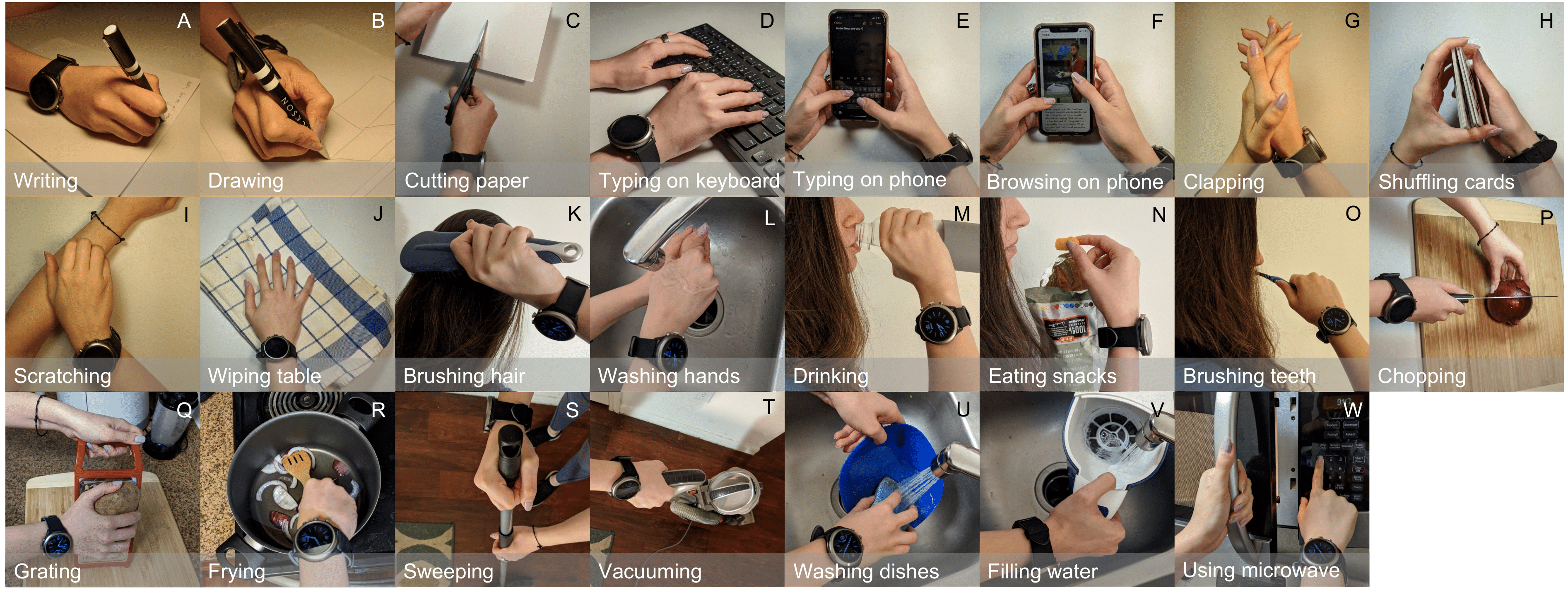

Automatically recognizing a broad spectrum of human activities is key to realizing many compelling applications in health, personal assistance, human-computer interaction, and smart environments. However, in real-world settings, approaches to human action perception have been largely constrained to detecting mobility states, e.g., walking, running, standing. In this work, we explore the use of inertial-acoustic sensing provided by off-the-shelf commodity smartwatches for detecting activities of daily living (ADLs). We conduct a semi-naturalistic study with a diverse set of 15 participants in their own homes and show that acoustic and inertial sensor data can be combined to recognize 23 activities such as writing, cooking, and cleaning with high accuracy. We further conduct a completely in-the-wild study with 5 participants to better evaluate the feasibility of our system in practical, unconstrained scenarios. We comprehensively study various baseline machine learning and deep learning models with three different fusion strategies, demonstrating the benefit of combining inertial and acoustic data for ADL recognition. Our analysis underscores the feasibility of high-performing recognition of daily activities using inertial-acoustic data from practical off-the-shelf wrist-worn devices, while also uncovering challenges faced in unconstrained settings. We encourage researchers to use our public dataset to further push the boundary of ADL recognition in the wild.

FULL CITATION

Sarnab Bhattacharya, Rebecca Adaimi, and Edison Thomaz. 2022. Leveraging Sound and Wrist Motion to Detect Activities of Daily Living with Commodity Smartwatches. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 6, 2, Article 42 (June 2022), 28 pages. DOI:https://doi.org/10.1145/3534582